Robot cars and the fear gap

Sarah Connor told me that robots are going to kill us all, but I’m weirdly excited about climbing inside one and letting it drive me around. I’m an exception; last year, I wrote about the shape that broader media and community reactions to autonomous vehicles would take:

“Humans are responsible for the vast majority of motor vehicle deaths and injuries. In 2010 in Australia, there were 1,248 fatalities associated with motor vehicles; 75% to 90% of these were caused by human error.”

“The [media coverage] pattern is simple: the magnitude of a risk is misperceived, individuals and media outlets react, and politicians demand greater regulation”

This tragic trajectory is now taking shape. No technology will completely eradicate vehicular fatalities, but given the major role of human error in car accidents, computer assistance, and eventually computer control, should lead to a serious reduction in death and injury. For now, though, that doesn’t seem to matter. We’re set to see a period of skewed risk perception, driven by information presented in a way that maximises skew and minimises math.
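The quoted percentages are easier to weigh as head counts. A minimal sketch of the arithmetic, using only the 2010 Australian figures quoted above (the constant and function names are illustrative, not from any source):

```python
# Figures quoted above: 1,248 motor-vehicle fatalities in Australia in 2010,
# of which 75% to 90% were attributed to human error.
TOTAL_FATALITIES_2010 = 1248
HUMAN_ERROR_SHARE = (0.75, 0.90)  # lower and upper bound of the quoted range


def human_error_deaths(total: int, share: float) -> float:
    """Number of deaths implied by a given share attributed to human error."""
    return total * share


low, high = (human_error_deaths(TOTAL_FATALITIES_2010, s) for s in HUMAN_ERROR_SHARE)
print(f"{low:.0f} to {high:.0f} deaths attributed to human error")
```

On those figures, somewhere between roughly 936 and 1,123 deaths in a single year in one country were attributed to human error, which is the pool of harm that computer assistance targets.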

Recently, Tesla enthusiast Joshua Brown was killed in a horrible accident on an American highway, in which the Model S he was in, running in ‘autopilot’ mode, failed to brake after a truck turned into its path. Tesla said, in a blog post:

“The customer who died in this crash had a loving family and we are beyond saddened by their loss. He was a friend to Tesla and the broader EV community, a person who spent his life focused on innovation and the promise of technology and who believed strongly in Tesla’s mission. We would like to extend our deepest sympathies to his family and friends.”

Since then, a variety of articles have criticised Tesla and dwelt heavily on the incident. A context-free focus on salient, dramatic events is a key component in the exaggeration of risk, something I highlighted in my predictions.

Tesla CEO Elon Musk has asserted, in blog posts and on Twitter, that though the accident is indeed a tragedy, a double standard exists – the Model S has an excellent safety record, and autonomous vehicle technology is something that will almost certainly lead to a serious reduction in road fatalities.

You can feel Musk’s frustration when you scroll through his replies (and when you read Tesla’s follow-up blog post). This has drawn criticism of both him and the company from some ‘Crisis Communications’ experts:

“According to Bernstein, resorting – as Musk did – to statistics to try to put an accident or malfunction which resulted in a death into a wider context, however well-meaning, was ill-judged. “I haven’t seen anybody foolish enough to try the statistics approach in a long time,” he said.

Asked what advice he would give to Musk, Bernstein said that he should “take a step back, take a deep breath, and practice delivering a message that communicates compassion, confidence, and competence”.

“And if you can’t do that, keep your mouth shut,” he added”

I’ve seen plenty of instances where people lean too heavily on facts, and disregard community perceptions. I’ve also seen the inverse, where important facts are left out in favour of eagerly prodding community reactions.

The important thing here is that Musk’s company is designing and deploying a safety technology into modern cars. This runs parallel to his efforts to transition vehicles away from internal combustion engines and towards electric motors – in essence, another safety feature removing the dangers created by sole reliance on carbon-intensive fuels.

In this context, statistics matter. The crisis communications experts seem to value only community reactions, and to disregard entirely the question of whether this technology actually reduces risk. They are being misled by their instincts, and this hostility towards communicating the mathematics of risk will likely contribute to delays in deploying seemingly valuable safety features.

Musk has shunned this evidence-blind approach; hopefully that’s because he considers deploying this safety feature more important than bowing to the dominant skew in media coverage of new technology. Ideally, Tesla wouldn’t have to do this: the statistical context would be included in media coverage by default.

There’s a historical precedent for this. An article from a 1984 edition of the New York Times outlines efforts to encourage seatbelt use, and the dramatic reasons people gave for refusing to wear them:

“Researchers say that misperceptions about the uses of seat belts, in addition to the discomfort of wearing them, contribute to drivers’ reluctance to buckle up. There is a persistent myth, for example, that it is safer to be thrown free of the car than to be restrained by a belt. In fact, the chances of being killed in a crash increase 25 times if an occupant flies from the vehicle. Others fear being trapped by a belt if the car catches fire or falls into water”

Emotionally jarring tales of seatbelts taking the lives of occupants by locking them in burning cars, or the strap causing injury, have been around since the 1960s. Unfortunately, some of these are probably based on real occurrences. In the 1930s, The Gippsland Times editorialised on seatbelts:

“That it is deemed necessary for such a safety measure to be even suggested for self protection of drivers and passengers in motor cars is a regrettable reflection of the frailty of human nature and an indictment against bad and careless driving, the cause of nearly all motor accidents.”

This prescient piece highlights something important, echoed by a psychologist quoted in the NYT article: “if you ask a room full of people to rate themselves as drivers, they all say they are the top of the distribution”. The Gippsland Times errs in assuming that those who suffer serious accidents are ‘bad’ and ‘careless’; they’re ordinary drivers, running into the limits of human perception, reaction time, attention and salience. For one person, operating a car safely is easy. Millions of humans driving for many hours a day on congested and complicated road networks is something else altogether.

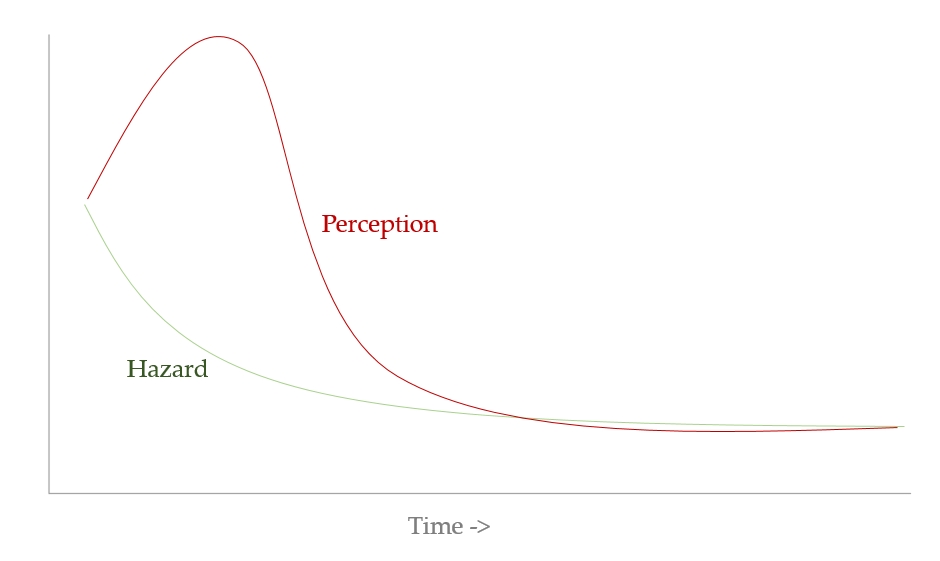

It seems counter-intuitive, but as this safety technology saves an increasing number of lives, we’ll increasingly perceive it as risky and unsafe, and media outlets will continue to maximise this emotionally salient disconnect.

It would be quite nice to skip this gap altogether, but an inherent feature of new technology is the presentation of familiar risks as new and scary threats. If this ‘Hazard-Perception’ gap emerged around seatbelts, it’s definitely going to emerge around people stepping inside computer cars. We pay a lot of attention to warnings presented in the right way; hence the incentive for click-driven media coverage to widen the gap. But, as Michael Barnard writes at Clean Technica:

“This level of [autonomous technology] sophistication and competence leads to drivers using it. A large part of the reason Tesla is in the spotlight for the fatality is because its driver assist features are so much better than its competitors. Simply put, fatalities are a statistical inevitability of driving, but the other manufacturers’ technology hasn’t been used enough that the sad inevitability has occurred yet”
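Barnard’s point that fatalities are “a statistical inevitability” of driving can be sketched with a simple Poisson model, in which fatalities occur independently at a constant rate per mile driven. The rate (one fatality per 100 million miles) and the fleet mileages below are hypothetical round numbers chosen purely for illustration; they are not figures from Tesla or any other manufacturer:

```python
import math

# HYPOTHETICAL rate, a round number for illustration only:
# one fatality per 100 million miles driven.
FATALITIES_PER_MILE = 1 / 100_000_000


def prob_at_least_one_fatality(miles: float, rate: float = FATALITIES_PER_MILE) -> float:
    """Poisson model: P(N >= 1) = 1 - exp(-rate * miles)."""
    return 1.0 - math.exp(-rate * miles)


# Hypothetical fleets that are equally safe per mile and differ only in
# how many miles their driver-assist feature has logged.
for miles in (1_000_000, 10_000_000, 100_000_000, 200_000_000):
    p = prob_at_least_one_fatality(miles)
    print(f"{miles:>12,} miles: {p:.1%} chance of at least one fatality")
```

Under these assumed numbers, a fleet with 1 million miles of use has roughly a 1% chance of having seen a fatality, while one with 100 million miles has about a 63% chance, even though every vehicle is equally safe per mile. The model deliberately ignores differences between drivers, roads and vehicles; its only purpose is to show why the most-used system tends to suffer the first headline.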

The ‘crisis communications’ experts aren’t talking about the thousands of deaths each day caused by cars controlled by human minds because they don’t need to: incumbency has desensitised us to the serious risks that emerge when you combine our brains (with all of their perceptual flaws and cognitive shortcuts) with a sharp, heavy and enormous metal box powered by compressed and refined old, dead plant matter.

In this instance, the lesson is clear: don’t treat communication as a binary choice. Sensitivity is vital, but the statistical context has to be championed in a way that is not only accessible, but more interesting and compelling than the story of fear that will, by default, dominate coverage of robot cars.

[Edit 13/07/2016 – I didn’t realise it, but I actually watched one of Joshua’s videos a few months ago – in which the collision avoidance software in his car swerves to avoid a truck that changed into his lane without looking]

Excellent piece as always. The hazard-perception graph is pertinent, as is the comparison to seatbelts.

I wrote a bit about what Tesla is saying regarding the statistics here.

https://www.quora.com/Should-Tesla-Autopilot-safety-claim-be-considered-lying-with-statistics/answer/Michael-Barnard-14

It’s worth noting that Tesla’s first notification to the public of this was entitled ‘A Tragic Loss’, not ‘Teslas are statistically safer’. Further, they closed the notice with this:

“The customer who died in this crash had a loving family and we are beyond saddened by their loss. He was a friend to Tesla and the broader EV community, a person who spent his life focused on innovation and the promise of technology and who believed strongly in Tesla’s mission. We would like to extend our deepest sympathies to his family and friends.”

Tesla’s every word is dissected. They aren’t doing as badly as some are suggesting at communicating.

As always, I am greatly bothered by the distinction between “human” driven cars and “computer” driven cars (or worse still, “driverless” cars); and the idea that what we have now are accidents caused by “human error”, whereas in the future we will have failures of automated systems.

It is humans all the way down. Humans design the cars, the roads and the cities in which they are embedded. Humans write the code for computer assisted cars, and when one crashes, it is still every bit (ha ha) as much “human error” as when a completely computerless car crashes. Computer control is still human control.

To be fair, you’ve done a better job than most of talking about this as computer assistance, a safety feature, or simply a substantial change in the risk profile of being in a vehicle on the road.

This might seem like semantics, but it bothers me because as good as this tech seems right now, things will change when commercial pressure becomes a factor. Today, we have individual professional drivers pressured to take drugs and drive dangerously. In a decade, we will have engineering teams pressured to ship early or half-arse new features for the same reasons.

But in the latter case, if we have spent years eliding the human factor from the language we use to talk about cars and their fatalities, we will inevitably see a reduction in the accountability for the loss of human life. And the risk profile will change from what you currently predict it will be, to something only marginally better than what we have now.

The real threat with automated or semi-automated, computer-equipped cars is the 24/7 hacking activity going on around the globe. I mean, these cars will make great targets later, on a massive scale! And the software in those computer-driven cars is only as good as its human creators. I mean, look at Microsoft… 🙂

No, the real problem is…what I say! Human-driven cars crash, sure, but they have one big advantage over robot cars: human consciousness. Robots don’t have it, and never will. They depend on clever algorithms ‘interpreting’ the 3D video and reference maps; but true AI is as far off as it ever was. Driverless cars are an accident waiting to happen. Read my blog! https://soothfairy.wordpress.com/2016/05/16/driverless-cars-need-ai/

I feel like new technology is always looked at with a measure of scepticism. Sometimes it’s warranted, but in this case I don’t think it is.

Autonomous vehicles could make our roads safer and more efficient. But people don’t like the idea of handing over something that they have been taught, all their lives, is an incredibly dangerous activity.

I suppose it’s a case of people falling into the ‘what if’ trap. What if they or their family end up among the tiny fraction of deaths or injuries that could be caused by autonomous software malfunctioning? The actual numbers, as you’ve described, are ignored.

It’s very difficult to argue with a ‘what if’ argument, because it already assumes that a terrible event will happen, however unlikely.

I can see a lot of frustration for Musk in the future.

Wow! That is amazing stuff there!

I think the fear stems from the fact that people are bad drivers. They get into situations every day that require them to act and think. They fail to see these situations coming because they’re doing something wrong: following too closely, going too fast, fiddling with the radio. They don’t understand that an automated car generally avoids the vast majority of tricky situations, and even when it is in one, its reaction time means it’s not actually a problem for the computer, unlike for its lethargic human counterpart.

I’m concerned about people pushing for “moral” controls in the car based on this misperception: if an accident is going to happen, the car should pick the option that kills the fewest people. I’m trying to convince people that this would be a terrible thing, and that adding moral computing would most likely be more dangerous. I don’t want a car that’s pretending to think like a human. I just want it to drive safely.

Media coverage on this drives me nuts. There are real problems to be worked out with self-driving cars, like the ethical dilemmas for the programmers coding the toughest decisions about crashing or killing, but I would already prefer to share the road with self-driving technology than the average driver in the Boston area. There’s a reason they’re called Massholes… I’m more concerned with potential hacking, as the previous comment suggests, than the typical behavior of typical cars in traffic. That’s because I don’t believe technology companies are currently taking security seriously enough, or bearing enough responsibility for obviously lame, but financially expedient, decisions in that area. (I just found your blog and I’m loving it!)

Very informative!

This blog sounds very interesting. Ketan, man, can you tell me more about what this blog is going to cover?

This is a nice reading, but short. Enjoy!

Technology is actually good, but let’s bear in mind that it is designed by humans. Most accidents are caused by those same human limitations and, in some cases, by carelessness and negligence in obeying simple rules. I think if we have a very large percentage of humans that are at least 90 percent sane, this will reduce road hazards to a minimal level.

Yeah! The fear gap is real! I had that with cloud computing until I sat down and reviewed the facts! I need to do this with my hesitation with robot cars.

Excellent piece! I’ve always thought that for autonomous cars to become accepted on the road today, we would need to have “HOV” type lanes strictly for those types of cars, separated from all human operated vehicles.
